With growing deployments of IoT devices and the arrival of fast 5G wireless, the case for edge computing, which places compute, storage and analytics close to where data is created, keeps getting stronger.

By Jon Gold and Keith Shaw

Edge computing is transforming how data generated by billions of IoT and other devices is stored, processed, analyzed and transported.

The early goal of edge computing was to reduce the bandwidth costs associated with moving raw data from where it was created to either an enterprise data center or the cloud. More recently, the rise of real-time applications that require minimal latency, such as autonomous vehicles and multi-camera video analytics, is driving the concept forward.

The ongoing global deployment of the 5G wireless standard ties into edge computing because 5G provides the fast, low-latency wireless links that these cutting-edge use cases and applications depend on.

What is edge computing?

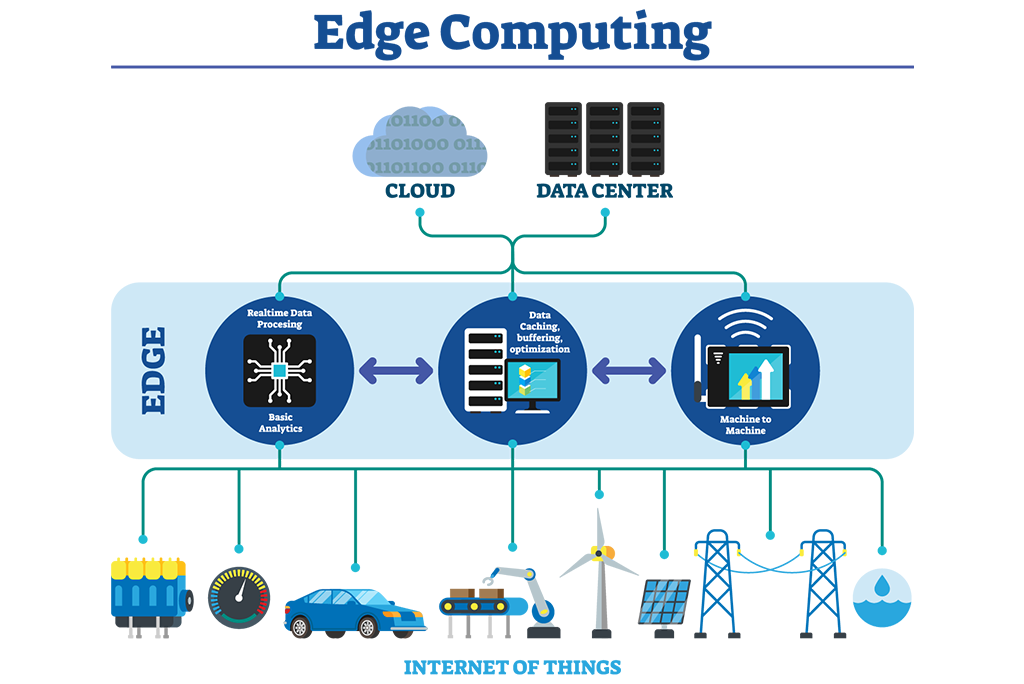

Gartner defines edge computing as “a part of a distributed computing topology in which information processing is located close to the edge—where things and people produce or consume that information.”

At its most basic level, edge computing brings computation and data storage closer to the devices where data is gathered, rather than relying on a central location that can be thousands of miles away. The goal is to keep data, especially real-time data, from suffering latency that can degrade an application's performance. In addition, companies can save money by processing data locally, which reduces the amount of data that must be sent to a centralized or cloud-based location.

Think about devices that monitor manufacturing equipment on a factory floor or an internet-connected video camera that sends live footage from a remote office. While a single device producing data can transmit it across a network quite easily, problems arise when the number of devices transmitting data at the same time grows. Instead of one video camera transmitting live footage, multiply that by hundreds or thousands of devices. Not only will quality suffer due to latency, but the bandwidth costs can be astronomical.
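To see how quickly the numbers grow, here is a back-of-the-envelope sketch. The 4 Mbps per-camera bitrate is an assumption chosen for illustration, not a figure from any real deployment. At that rate, 1,000 cameras streaming raw video around the clock need about 4 Gbps of sustained uplink, or roughly 1,300 TB of traffic a month.

```python
# Illustrative arithmetic only: the per-camera bitrate and camera counts are
# hypothetical assumptions, not measurements.

def aggregate_uplink_mbps(num_devices: int, mbps_per_device: float) -> float:
    """Total uplink bandwidth needed if every device streams raw data upstream."""
    return num_devices * mbps_per_device

def monthly_terabytes(mbps: float, hours_per_day: float = 24, days: int = 30) -> float:
    """Convert a sustained bitrate in megabits/s into terabytes transferred per month."""
    seconds = hours_per_day * 3600 * days
    megabits = mbps * seconds
    # megabits -> megabytes (/8) -> terabytes (/1,000,000), using decimal units
    return megabits / 8 / 1_000_000

if __name__ == "__main__":
    for n in (1, 100, 1000):
        total = aggregate_uplink_mbps(n, 4.0)  # assumed 4 Mbps per camera
        print(f"{n:>5} cameras: {total:,.0f} Mbps sustained, ~{monthly_terabytes(total):,.1f} TB/month")
```

The cost scales linearly with device count, which is why filtering or summarizing data before it leaves the site pays off at scale.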

Edge-computing hardware and services help solve this problem by providing a local source of processing and storage for many of these systems. An edge gateway, for example, can process data from an edge device and then send only the relevant data on to the cloud. Or, when an application needs a real-time response, it can send data straight back to the edge device.
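A minimal sketch of the "send only the relevant data" idea follows, assuming a gateway that receives batches of sensor readings and an acceptable operating range. The `Reading` type, the `filter_at_gateway` function, the sensor name and the 50 to 80 range are all hypothetical. Out-of-range readings are forwarded individually, while the whole batch is reduced to a small summary, so the raw stream stays on site.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Tuple

@dataclass
class Reading:
    sensor_id: str
    value: float  # hypothetical unit, e.g. bearing temperature in degrees C

def filter_at_gateway(readings: List[Reading], low: float,
                      high: float) -> Tuple[List[Reading], Dict[str, object]]:
    """Split a batch into readings to forward to the cloud and a compact summary.

    Readings outside [low, high] are forwarded individually; the full batch is
    reduced to a count, a mean and an anomaly count instead of being uploaded.
    """
    anomalies = [r for r in readings if not (low <= r.value <= high)]
    values = [r.value for r in readings]
    summary = {
        "count": len(readings),
        "mean": mean(values) if values else None,
        "anomalies": len(anomalies),
    }
    return anomalies, summary

if __name__ == "__main__":
    batch = [Reading("press-1", v) for v in (61.0, 62.5, 60.8, 95.2, 61.7)]
    to_cloud, summary = filter_at_gateway(batch, low=50.0, high=80.0)
    print(to_cloud)  # only the 95.2 reading
    print(summary)
```

Here five readings become one forwarded record plus a three-field summary; a real gateway would apply whatever rules the application defines.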

What is the relationship between 5G and edge computing?

While edge computing can be deployed on networks other than 5G (such as 4G LTE), the converse is not necessarily true. In other words, companies cannot fully benefit from 5G's low latency unless they have an edge computing infrastructure.

“By itself, 5G reduces the network latency between the endpoint and the mobile tower, but it does not address the distance to a data center, which can be problematic for latency-sensitive applications,” says Dave McCarthy, research director for edge strategies at IDC.

Mahadev Satyanarayanan, a professor of computer science at Carnegie Mellon University who co-authored a 2009 paper that set the stage for edge computing, agrees. "If you have to go all the way back to a data center across the country or other end of the world, what difference does it make, even if it's zero milliseconds on the last hop."
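Simple physics makes the point concrete. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200 km per millisecond, so distance alone sets a floor on round-trip time. The sketch below is a lower-bound estimate under stated assumptions: straight-line fiber, no queuing, routing detours or server processing. The 50 km and 4,000 km distances are illustrative, and the function name is hypothetical.

```python
# Lower-bound latency estimate; real-world round trips will be higher.
FIBER_KM_PER_MS = 200.0  # light in fiber covers roughly 200 km per millisecond (~2/3 of c)

def min_round_trip_ms(radio_rtt_ms: float, fiber_km: float) -> float:
    """Radio last-hop round trip plus fiber propagation out and back.

    Ignores queuing, routing detours and server processing time.
    """
    return radio_rtt_ms + 2 * fiber_km / FIBER_KM_PER_MS

if __name__ == "__main__":
    # A 0 ms radio hop mirrors the "zero milliseconds on the last hop" remark.
    for label, km in (("nearby edge site", 50), ("data center across the country", 4000)):
        print(f"{label}: {min_round_trip_ms(0.0, km):.1f} ms")
```

Even with a perfect last hop, the cross-country round trip cannot drop below about 40 ms, while an edge site 50 km away starts at about 0.5 ms.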

As more 5G networks are deployed, edge computing and 5G wireless will become more closely linked, but companies can still deploy edge computing infrastructure over other network models, including wired and even Wi-Fi, if needed. However, given the higher speeds 5G offers, particularly in rural areas not served by wired networks, edge infrastructure is more likely to use a 5G network.

How does edge computing work?

The physical architecture of the edge can be complicated, but the basic idea is that client devices connect to a nearby edge module for more responsive processing and smoother operations. Edge devices can include IoT sensors, an employee’s notebook computer, their latest smartphone, security cameras or even the internet-connected microwave oven in the office break room.
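One way a client might find a "nearby" edge module is to measure round-trip time to a list of candidate nodes and pick the fastest. The sketch below assumes that approach; the node names, the use of a TCP handshake on port 443 as the probe, and both function names are hypothetical. Real deployments often rely on DNS, anycast or the carrier's network to do this steering instead.

```python
import socket
import time
from typing import Callable, Iterable, Optional

def tcp_connect_probe(host: str, port: int = 443, timeout: float = 1.0) -> float:
    """Time one TCP handshake to host:port and return it in milliseconds."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000.0

def pick_edge_node(nodes: Iterable[str],
                   probe: Callable[[str], float] = tcp_connect_probe) -> str:
    """Return the node with the lowest measured round-trip time.

    Nodes whose probe raises OSError (DNS failure, refusal, timeout) are skipped.
    """
    best_node: Optional[str] = None
    best_rtt = float("inf")
    for node in nodes:
        try:
            rtt = probe(node)
        except OSError:
            continue
        if rtt < best_rtt:
            best_node, best_rtt = node, rtt
    if best_node is None:
        raise RuntimeError("no edge node reachable")
    return best_node

if __name__ == "__main__":
    # Hypothetical RTTs in ms standing in for live measurements.
    measured = {"edge-plant-a": 2.1, "edge-metro": 9.8, "cloud-region": 41.0}
    print(pick_edge_node(measured, probe=measured.__getitem__))
```

Passing the probe in as a parameter keeps the selection logic testable without a live network.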

In an industrial setting, the edge device can be an autonomous mobile robot or a robot arm in an automotive factory. In health care, it can be a high-end surgical system that lets doctors perform surgery from remote locations. Edge gateways themselves are considered edge devices within an edge-computing infrastructure. Terminology varies, so you might hear the modules called edge servers or edge gateways.

While many edge gateways or servers will be deployed by service providers looking to support an edge network (Verizon, for example, for its 5G network), enterprises looking to adopt a private edge network will need to consider this hardware as well.

How to buy and deploy edge computing systems

The way an edge system is purchased and deployed can vary widely. At one end of the spectrum, a business might want to handle much of the process itself. This would involve selecting edge devices, probably from a hardware vendor like Dell, HPE or IBM, architecting a network that's adequate for the use case, and buying management and analysis software.

That’s a lot of work and would require a considerable amount of in-house expertise on the IT side, but it could still be an attractive option for a large organization that wants a fully customized edge deployment.

At the other end of the spectrum, vendors in particular verticals are increasingly marketing edge services that they will manage for you. An organization that wants to go this route can simply ask a vendor to install its own hardware, software and networking and pay a regular fee for use and maintenance. Industrial IoT (IIoT) offerings from companies like GE and Siemens fall into this category.

This approach has the advantage of being easy and relatively headache-free in terms of deployment, but heavily managed services like this might not be available for every use case.

What are some examples of edge computing?

Just as the number of internet-connected devices continues to climb, so does the number of use cases where edge computing can either save a company money or take advantage of extremely low latency.

Verizon Business, for example, describes several edge scenarios, including end-of-life quality control processes for manufacturing equipment; using 5G edge networks to create pop-up network ecosystems that change how live content is streamed with sub-second latency; using edge-enabled sensors to provide detailed imaging of crowds in public spaces to improve health and safety; automated manufacturing safety, which uses near real-time monitoring to send alerts about changing conditions to prevent accidents; manufacturing logistics, which aims to improve efficiency throughout the process from production to shipment of finished goods; and creating precise models of product quality via digital twin technologies to gain insights from manufacturing processes.

The hardware required for different types of deployment will differ substantially. Industrial users, for example, will put a premium on reliability and low latency, requiring ruggedized edge nodes that can operate in the harsh environment of a factory floor, and dedicated communication links (private 5G, dedicated Wi-Fi networks or even wired connections) to achieve their goals.

Connected agriculture users, by contrast, will still require a rugged edge device to cope with outdoor deployment, but the connectivity piece could look quite different. Low latency might still be a requirement for coordinating the movement of heavy equipment, but environmental sensors are likely to need longer range while sending far less data. A low-power wide-area network (LPWAN) connection such as Sigfox could be the best choice there.

Other use cases present different challenges entirely. Retailers can use edge nodes as an in-store clearinghouse for a host of different functionality, tying point-of-sale data together with targeted promotions, tracking foot traffic, and more for a unified store management application.

The connectivity piece here could be simple, with in-house Wi-Fi for every device, or more complex, with Bluetooth or other low-power connectivity serving foot-traffic tracking and promotional services, and Wi-Fi reserved for point-of-sale and self-checkout.

What are the benefits of edge computing?

For many companies, cost savings alone can be a driver to deploy edge computing. Companies that initially embraced the cloud for many of their applications may have discovered that bandwidth costs were higher than expected, and are looking for a less expensive alternative. Edge computing might be a fit.

Increasingly, though, the biggest benefit of edge computing is the ability to process and store data faster, enabling more efficient real-time applications that are critical to companies. Before edge computing, a smartphone scanning a person's face for facial recognition would need to run the facial recognition algorithm through a cloud-based service, adding a network round trip and remote processing time to every scan. With an edge computing model, the algorithm could run locally on an edge server or gateway, or even on the smartphone itself.

Applications such as virtual and augmented reality, self-driving cars, smart cities and even building-automation systems require this level of fast processing and response.

Edge computing and AI

Companies such as Nvidia continue to develop hardware for the growing amount of processing done at the edge, including modules with AI functionality built in. The company's latest product in this area is the Jetson AGX Orin developer kit, a compact and energy-efficient AI supercomputer aimed at developers of robotics, autonomous machines, and next-generation embedded and edge computing systems.

Orin delivers 275 trillion operations per second (TOPS), an 8x improvement over the company’s previous system, Jetson AGX Xavier. It also includes updates in deep learning, vision acceleration, memory bandwidth and multimodal sensor support.

AI algorithms require large amounts of processing power, which is why many of them run on cloud-based services. As AI chipsets that can do this work at the edge become more common, however, more systems will be built to handle those tasks locally.

Privacy and security concerns

From a security standpoint, data at the edge can be troublesome, especially when it’s being handled by different devices that might not be as secure as centralized or cloud-based systems. As the number of IoT devices grows, it’s imperative that IT understands the potential security issues and makes sure those systems can be secured. This includes encrypting data, employing access-control methods and possibly VPN tunneling.
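Encryption itself usually comes from TLS or a VPN rather than application code. A closely related safeguard that fits in a few lines is message authentication: the edge device attaches a keyed tag to each message, and the gateway rejects anything whose tag doesn't match, which blocks tampered or forged data. The sketch below uses HMAC-SHA256 from Python's standard `hmac` module. Note that it provides integrity and authentication, not confidentiality, so it complements encryption rather than replacing it. The function names, the message fields and the key are hypothetical.

```python
import hashlib
import hmac
import json

def sign_message(key: bytes, payload: dict) -> dict:
    """Serialize payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}

def verify_message(key: bytes, message: dict) -> dict:
    """Return the decoded payload if the tag matches; raise ValueError otherwise."""
    expected = hmac.new(key, message["body"].encode(), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking how many characters matched via timing
    if not hmac.compare_digest(expected, message["tag"]):
        raise ValueError("authentication failed: message rejected")
    return json.loads(message["body"])

if __name__ == "__main__":
    key = b"hypothetical-per-device-secret"  # real keys would be provisioned securely per device
    msg = sign_message(key, {"sensor": "cam-07", "motion": True})
    print(verify_message(key, msg))
    msg["body"] = msg["body"].replace("true", "false")  # simulate tampering in transit
    try:
        verify_message(key, msg)
    except ValueError as err:
        print(err)
```

Giving each device its own key also acts as a basic form of access control: a compromised device can't impersonate the others.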

Furthermore, differing device requirements for processing power, electricity and network connectivity can affect the reliability of an edge device. This makes redundancy and failover management crucial for devices that process data at the edge, so that data is still delivered and processed correctly when a single node goes down.
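A minimal failover sketch, assuming an ordered list of edge nodes (a primary, then standbys) and a pluggable `send` function. The node names, `send_with_failover` and `AllNodesFailed` are hypothetical. Each node gets a fixed number of attempts before the payload moves to the next node in the list.

```python
from typing import Callable, List, Sequence, Tuple

class AllNodesFailed(Exception):
    """Raised when no node in the failover list accepted the payload."""

def send_with_failover(payload: bytes, nodes: Sequence[str],
                       send: Callable[[str, bytes], None],
                       attempts_per_node: int = 2) -> str:
    """Deliver payload to the first node, in priority order, that accepts it.

    Each node gets `attempts_per_node` tries; returns the accepting node's name.
    """
    errors: List[Tuple[str, int, Exception]] = []
    for node in nodes:
        for attempt in range(1, attempts_per_node + 1):
            try:
                send(node, payload)
                return node
            except ConnectionError as exc:
                errors.append((node, attempt, exc))
    raise AllNodesFailed(errors)

if __name__ == "__main__":
    down = {"edge-primary"}  # hypothetical: the primary node has failed
    delivered = []

    def fake_send(node: str, payload: bytes) -> None:
        if node in down:
            raise ConnectionError(f"{node} unreachable")
        delivered.append((node, payload))

    print(send_with_failover(b"reading=61.7", ["edge-primary", "edge-standby"], fake_send))
```

A production version would typically add backoff between retries and make processing idempotent, so that a payload retried after a timeout isn't counted twice.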